Project Summary

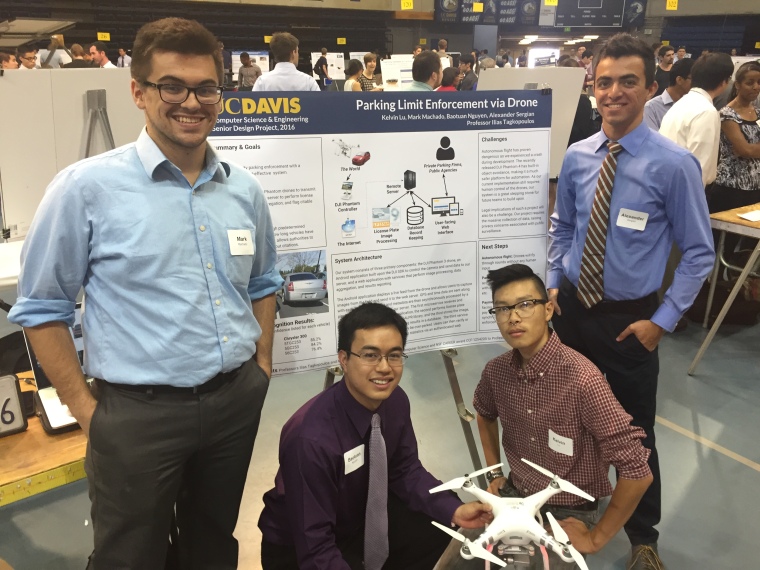

We’ve created a two-part system that allows DJI Phantom 3 drones to be used for parking enforcement. The first component, the ‘Patrol’ Android application, allows the Phantom 3 to send images (along with GPS coordinates and a timestamp) to our server, which is the second component of this project. The server runs license plate recognition, aggregates the results, applies enforcement logic, and finally serves a web interface where users can view and process citations. The system uses authentication to ensure that only approved drones can submit evidence.
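To make the aggregation and enforcement step more concrete, here is a minimal Python sketch of one rule such a server could apply: group recognized plates by location and flag any plate seen in the same spot for longer than a time limit. Everything in it is an assumption for illustration, including the `Sighting` record, the `find_overstays` function, the two-hour limit, and the grid-cell size; the project's actual rules and data model live in the repository linked below and may differ.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Sighting:
    """One recognized plate from a drone image (hypothetical record layout)."""
    plate: str          # text returned by license plate recognition
    latitude: float     # GPS position attached to the image
    longitude: float
    seen_at: datetime   # timestamp attached to the image


def find_overstays(sightings, limit=timedelta(hours=2), cell=1e-4):
    """Return {plate: longest span} for plates seen in one grid cell longer than `limit`.

    `cell` is the grid size in degrees; 1e-4 degrees of latitude is roughly 11 m.
    Both defaults are illustrative assumptions, not values from the project.
    """
    # Aggregate: collect timestamps per (plate, grid cell).
    times_by_key = defaultdict(list)
    for s in sightings:
        key = (s.plate, round(s.latitude / cell), round(s.longitude / cell))
        times_by_key[key].append(s.seen_at)

    # Enforce: a plate overstays if its first and last sighting in the
    # same cell are further apart than the limit.
    overstays = {}
    for (plate, _, _), times in times_by_key.items():
        span = max(times) - min(times)
        if span > limit:
            overstays[plate] = max(span, overstays.get(plate, span))
    return overstays


if __name__ == "__main__":
    day = datetime(2016, 6, 1)
    sightings = [
        Sighting("7ABC123", 38.5382, -121.7617, day.replace(hour=9)),
        Sighting("7ABC123", 38.5382, -121.7617, day.replace(hour=11, minute=30)),
        Sighting("6XYZ789", 38.5382, -121.7617, day.replace(hour=9)),
        Sighting("6XYZ789", 38.5382, -121.7617, day.replace(hour=10)),
    ]
    for plate, span in find_overstays(sightings).items():
        print(plate, "parked for at least", span)
```

In this sketch a plate that moves to a different spot between patrols starts a fresh group, so it is not flagged; whether that matches real enforcement policy is a design decision outside the scope of this example.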

Users can view and process citations through our web interface at: http://taglabrouter.genomecenter.ucdavis.edu/webservice/

Resources:

- All project code can be found at: https://github.com/quadsquad193/phantomboreas

- The user manual can be found at: https://www.dropbox.com/s/syu4eotxcqmv218/QuadcopterUserManual.pdf?dl=0

- The Patrol Android application APK can be found at: https://www.dropbox.com/s/oodbwicxhjcack4/app-debug.apk?dl=0

- Project Overview Video: https://www.youtube.com/watch?v=6PEZUbAusp0

Thanks again to Professor Tagkopoulos and Professor Liu for their guidance and support.

-Baotuan, Kelvin, Mark, and Alex